While great advances are being made in Artificial Intelligence (AI), some scientists have warned of the risks of a super-intelligent AI that may be difficult to control. Using theoretical calculations, an international team of researchers, including scientists from the Center for Humans and Machines at the Max Planck Institute for Human Development, showed that it is unlikely a super-intelligent AI could be controlled. Their study was published in the Journal of Artificial Intelligence Research.

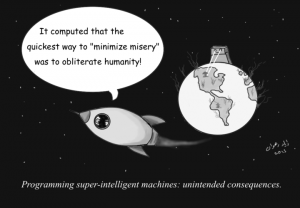

Suppose, for example, that someone programs an AI system with intelligence superior to that of humans, so that it can learn independently. Connected to the internet, the AI would have access to all of humanity's data. It could replace all existing programs and take control of every machine online worldwide. Whether this would produce a utopia or a dystopia is debatable.

Scientists and philosophers have asked whether a super-intelligent AI could be controlled at all, to make sure it does not harm humanity. The research team used theoretical calculations to investigate whether such control is possible.

‘A super-intelligent machine that controls the world sounds like science fiction. But there are already machines that perform certain important tasks independently without programmers fully understanding how they learned it. The question therefore arises whether this could at some point become uncontrollable and dangerous for humanity’, says study co-author Manuel Cebrian, leader of the Digital Mobilization Group at the Center for Humans and Machines, Max Planck Institute for Human Development.

Scientists have studied two ideas for how a super-intelligent AI could be controlled. The first is to restrict its capabilities, for example by sealing it off from the internet and other devices so it has no contact with the outside world. But this would leave the AI far less powerful, even unable to answer humanity's questions. The second idea is to motivate the AI from the outset to pursue only goals that benefit humanity, for example by programming ethical principles into it. However, the researchers show that these and other modern and historical ideas for controlling a super-intelligent AI have their limits.

In their study, the team proposed a theoretical containment algorithm that would ensure a super-intelligent AI cannot harm people by first simulating the AI's behavior and stopping it if that behavior is judged harmful. However, careful analysis shows that under the current paradigm of computing, such an algorithm cannot be built.

‘If you break the problem down to basic rules from theoretical computer science, it turns out that an algorithm that would command an AI not to destroy the world could inadvertently halt its own operations. If this happened, you would not know whether the containment algorithm is still analyzing the threat, or whether it has stopped to contain the harmful AI. In effect, this makes the containment algorithm unusable’, says Iyad Rahwan, Director of the Center for Humans and Machines.
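To make Rahwan's point concrete, here is a minimal Python sketch of a simulation-based containment check. It is not the algorithm from the study: the function `contain`, the step budget `max_steps` and the toy "AI" programs (modeled as generators that yield one named action per step, with `"harm"` standing in for a harmful action) are all hypothetical. Because a practical checker cannot wait forever, it has to give up after some budget, and at that point it cannot tell an AI that is merely slow from one that will never finish.

```python
from typing import Callable, Iterator

# Hypothetical toy model: an "AI" is a generator function yielding one action per step.
AI = Callable[[], Iterator[str]]


def contain(ai: AI, max_steps: int) -> str:
    """Simulate `ai` for at most `max_steps` actions and report a verdict."""
    actions = ai()
    for _ in range(max_steps):
        try:
            action = next(actions)
        except StopIteration:
            return "safe"       # the simulation finished without a harmful action
        if action == "harm":
            return "stop"       # harmful action seen: halt the real AI
    return "undecided"          # budget spent: still working, or never finishing?


def finishes() -> Iterator[str]:
    yield "compute"
    yield "answer"


def harmful() -> Iterator[str]:
    yield "compute"
    yield "harm"


def loops_forever() -> Iterator[str]:
    while True:
        yield "compute"


def harms_late() -> Iterator[str]:
    for _ in range(1000):
        yield "compute"
    yield "harm"


for ai in (finishes, harmful, loops_forever, harms_late):
    print(ai.__name__, contain(ai, max_steps=10))
```

With a budget of 10 steps, `loops_forever` and `harms_late` both come back `"undecided"`, even though one is harmless and the other is not. Raising the budget to 2,000 steps catches `harms_late`, but no fixed budget settles every possible program, which mirrors the ambiguity Rahwan describes.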

Based on these calculations, the containment problem is incomputable, that is, no single algorithm can determine whether an AI would harm the world. Furthermore, the researchers show that we may not even know when super-intelligent machines have arrived, because deciding whether a machine exhibits intelligence superior to that of humans falls in the same class of problems as the containment problem.
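Incomputability results of this kind rest on a self-reference argument like the one Alan Turing used to show that the halting problem cannot be solved. The Python sketch below is a toy illustration of that style of argument, not the study's formal proof. It assumes a hypothetical harm checker `decider` that always returns an answer (`True` meaning "this program will cause harm") and builds an `adversary` program that asks the checker about itself and then does the opposite, so the checker is guaranteed to be wrong about it. The names `make_adversary` and `decider_fails` are illustrative only.

```python
from typing import Callable

# A "program" here is a zero-argument function returning the action it finally takes.
Program = Callable[[], str]
# A hypothetical harm checker: returns True if it predicts the program will act harmfully.
Decider = Callable[[Program], bool]


def make_adversary(decider: Decider) -> Program:
    """Build a program that consults `decider` about itself and does the opposite."""
    def adversary() -> str:
        if decider(adversary):
            return "safe"   # checker predicted harm, so behave safely
        return "harm"       # checker predicted safety, so act harmfully
    return adversary


def decider_fails(decider: Decider) -> bool:
    """Return True if `decider` misjudges the adversary built against it."""
    adversary = make_adversary(decider)
    predicted_harm = decider(adversary)
    actual_harm = adversary() == "harm"
    return predicted_harm != actual_harm


# Two naive checkers that always answer; the construction fools both.
always_harmful: Decider = lambda program: True
always_safe: Decider = lambda program: False

print(decider_fails(always_harmful))
print(decider_fails(always_safe))
```

The same construction defeats any deterministic checker that always returns an answer. A checker that instead tries to simulate the program, such as `lambda program: program() == "harm"`, never returns on its adversary: in Python the mutual calls end in a `RecursionError`, the toy counterpart of a containment algorithm that keeps analyzing forever.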

The study ‘Superintelligence cannot be contained: Lessons from Computability Theory’ was published in the Journal of Artificial Intelligence Research. Other co-authors include Andres Abeliuk from the University of Southern California, Manuel Alfonseca from the Autonomous University of Madrid, Antonio Fernandez Anta from the IMDEA Networks Institute, and Lorenzo Coviello.

By Marvellous Iwendi.

Source: Max Planck Institute